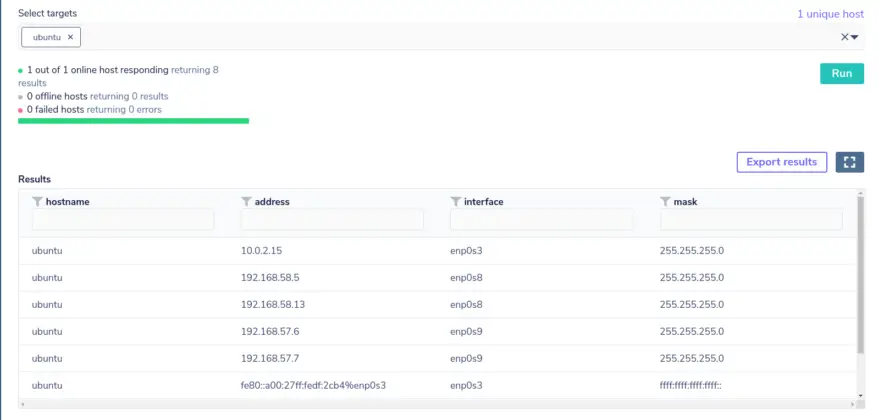

immediate remediation for FileSystemFull alerts at 11:15am.How long did it take from detection to remediation?.prod deployment started 1am UTC, filesystem-full alerts coming in 5:49am (in slack channel, not via pagerduty), SRE oncall starting to take action 12:15pm.How long did it take from the start of the incident to its detection?.Also, FilesystemFullSoon alerts are not going to pagerduty, but disks filled so fast, that we were at risk to have them filled up before SRE oncall was taking notice. The existing alarming worked to notify us about systems coming into critical state, but we didn't have specific alerts or monitoring for osqueryd misbehaving.Investigation showed issues with the embedded rocksdb and high CPU usage. a few hours after prod deployment, SRE oncall was paged for root disk filling up on git-servers (because of growing osqueryd data dir).Overall osqueryd CPU consumption by env (we are missing the week before as we switched to thanos longterm storage last week): How many customers tried to access the impacted service/feature?.How many attempts were made to access the impacted service/feature?.As the cpu usage of osqueryd was isolated to 1 core on each server there was no measurable slowdown of any service but it might be that it was contributing to slower response during times of higher load on the site.preventing them from doing X, incorrect display of Y. How did the incident impact customers? (i.e.mostly security and infrastructure teams having to spend time finding and fixing the root cause and preventing further impact.60 cores overall in the production fleet.

CPU load and disk IO on most machines went up, consuming an equivalent of ca.Minutes downtime or degradation : N/A Impact & Metrics

Team attribution : infrastructure/security
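The report gives clock times rather than durations. The sketch below turns those times into the two intervals the template asks for. It assumes that all times are UTC and fall on the same day (the report only states UTC for the deployment start), and it treats the 5:49am Slack alerts as the detection point and the 11:15am remediation as the end of the response. The helper names (`parse_clock`, `format_duration`, `interval`, `TIMELINE`) are hypothetical and exist only for this illustration.

```python
from datetime import datetime, timedelta


def parse_clock(hhmm: str) -> datetime:
    """Parse a 24-hour HH:MM clock time; the date part is a dummy (1900-01-01)."""
    return datetime.strptime(hhmm, "%H:%M")


def format_duration(delta: timedelta) -> str:
    """Render a non-negative timedelta as hours and zero-padded minutes, e.g. '4h49m'."""
    total_minutes = int(delta.total_seconds()) // 60
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours}h{minutes:02d}m"


# Hypothetical timeline table built from the times in the report,
# converted to 24-hour clock. Assumption: all UTC, all on the same day.
TIMELINE = {
    "prod deployment started": "01:00",
    "filesystem-full alerts in Slack": "05:49",
    "immediate remediation for FileSystemFull alerts": "11:15",
}


def interval(start_event: str, end_event: str) -> str:
    """Duration between two named events in TIMELINE, formatted as 'XhYYm'."""
    start = parse_clock(TIMELINE[start_event])
    end = parse_clock(TIMELINE[end_event])
    return format_duration(end - start)


if __name__ == "__main__":
    print("start -> detection:",
          interval("prod deployment started", "filesystem-full alerts in Slack"))
    print("detection -> remediation:",
          interval("filesystem-full alerts in Slack",
                   "immediate remediation for FileSystemFull alerts"))
```

Under these assumptions the script prints 4h49m from deployment start to detection and 5h26m from detection to remediation. If the times were recorded in different timezones, the intervals would shift accordingly.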
